Page Not Found

Page not found. Your pixels are in another canvas.

A list of all the posts and pages found on the site. For you robots out there, an XML version is available for digesting as well.

This is a page not in the main menu.

Published:

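Working with the OpenMP programming model, start by optimizing the algorithm at a coarse-grained level. This stage tests how well you understand the algorithm itself and your basic programming and algorithmic skills; expect several iterations, and analyze the algorithm's complexity correctly. After that comes fine-grained optimization, which is mostly a trade-off between computation and memory access. Vectorization is a very powerful tool here: vector registers are the GOAT! There are also some open questions, such as the most suitable loop unrolling factor, and so on…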
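As a rough illustration of the two levels described above, here is a minimal sketch in C. It is not taken from the original post: the dot-product kernel, the function names `dot` and `dot_unrolled4`, and the unroll factor of 4 are all assumptions chosen for illustration. The first function splits the loop across threads (coarse-grained) and asks the compiler to vectorize each thread's chunk (fine-grained); the second shows one manual unrolling choice, whose best factor depends on the target machine.

```c
#include <assert.h>
#include <stddef.h>

/* Coarse-grained: iterations are divided among threads.
 * Fine-grained: "simd" asks the compiler to vectorize each chunk.
 * The reduction clause gives every thread a private partial sum. */
double dot(const double *a, const double *b, long n)
{
    assert(a != NULL && b != NULL);
    double sum = 0.0;
    #pragma omp parallel for simd reduction(+:sum)
    for (long i = 0; i < n; ++i) {
        sum += a[i] * b[i];
    }
    return sum;
}

/* Hypothetical manual unrolling by 4 (the factor is an assumption).
 * Four independent accumulators shorten the dependency chain on the
 * sum; a tail loop handles the leftover elements. */
double dot_unrolled4(const double *a, const double *b, long n)
{
    assert(a != NULL && b != NULL);
    double s0 = 0.0, s1 = 0.0, s2 = 0.0, s3 = 0.0;
    long i = 0;
    for (; i + 3 < n; i += 4) {
        s0 += a[i]     * b[i];
        s1 += a[i + 1] * b[i + 1];
        s2 += a[i + 2] * b[i + 2];
        s3 += a[i + 3] * b[i + 3];
    }
    for (; i < n; ++i) {
        s0 += a[i] * b[i];
    }
    return (s0 + s1) + (s2 + s3);
}
```

With GCC or Clang, the pragmas take effect when compiling with `-fopenmp`; without that flag they are ignored and the code runs serially with the same result. Whether a factor of 4 beats 2 or 8 is exactly the kind of open question mentioned above, so it is worth measuring rather than assuming.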

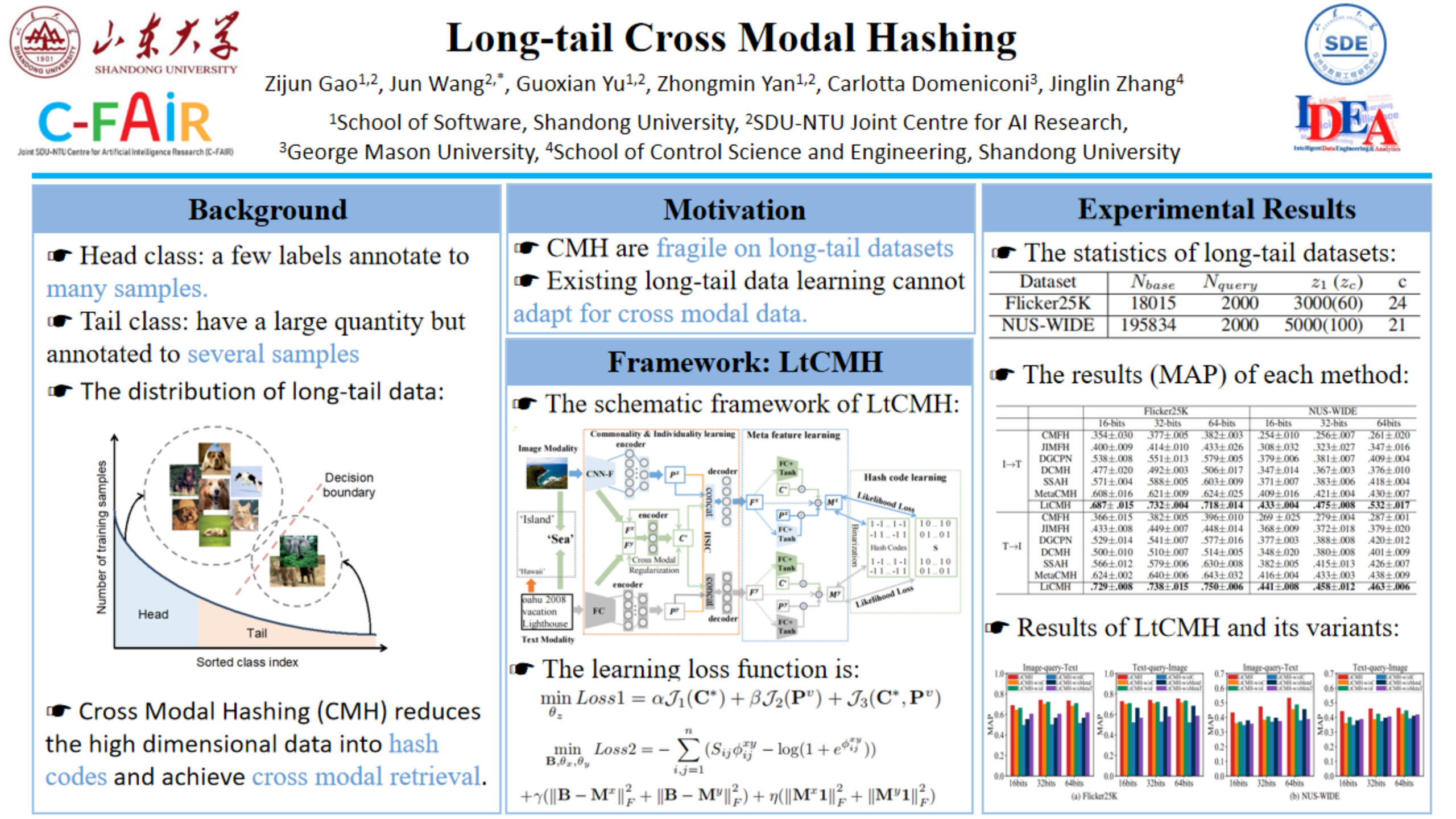

An introduction video for the paper presented at the AAAI-23 conference.

Published in the 37th AAAI Conference on Artificial Intelligence (AAAI) (CCF A), in print, 2022

Existing Cross Modal Hashing (CMH) methods are mainly designed for balanced data, while imbalanced data with a long-tail distribution is more common in the real world. Several long-tail hashing methods have been proposed, but they cannot adapt to multi-modal data because of the complex interplay between labels and the individuality and commonality information of multi-modal data. Furthermore, CMH methods mostly mine the commonality of multi-modal data to learn hash codes, which may override tail labels encoded by the individuality of the respective modalities. In this paper, we propose LtCMH (Long-tail CMH) to handle imbalanced multi-modal data. LtCMH first adopts auto-encoders to mine the individuality and commonality of different modalities by minimizing the dependency between the individuality of the respective modalities and by enhancing the commonality of these modalities. It then dynamically combines the individuality and commonality with direct features extracted from the respective modalities to create meta features that enrich the representation of tail labels, and binarizes the meta features to generate hash codes. LtCMH significantly outperforms state-of-the-art baselines on long-tail datasets and achieves better (or comparable) performance on datasets with balanced labels.
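To make the last step of the pipeline more concrete, the sketch below shows one common way a real-valued feature vector can be turned into a binary hash code and compared by Hamming distance. This is an illustration only, not the LtCMH implementation: sign thresholding at zero, the 64-bit code length limit, and the function names `binarize` and `hamming` are all assumptions, and the abstract does not specify how the paper binarizes its meta features.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed binarization rule: bit i is 1 when feature i is positive.
 * Supports codes of up to 64 bits packed into one integer. */
uint64_t binarize(const float *feat, int bits)
{
    assert(feat != NULL && bits >= 0 && bits <= 64);
    uint64_t code = 0;
    for (int i = 0; i < bits; ++i) {
        if (feat[i] > 0.0f) {
            code |= (uint64_t)1 << i;
        }
    }
    return code;
}

/* Hamming distance: number of bit positions where two codes differ.
 * Uses a portable loop that clears the lowest set bit each step. */
int hamming(uint64_t x, uint64_t y)
{
    uint64_t diff = x ^ y;
    int count = 0;
    while (diff != 0) {
        diff &= diff - 1;
        ++count;
    }
    return count;
}
```

In a retrieval setting, a query from one modality would be binarized the same way and ranked against stored codes from the other modality by `hamming`, with smaller distances treated as closer matches.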

Recommended citation: http://zjgao02.github.io/files/2211.15162.pdf

Published:

This is a description of your talk, which is a markdown file that can be markdown-ified like any other post. Yay markdown!

Published:

This is a description of your conference proceedings talk. Note the different field in type; you can put anything in this field.